In the ever-evolving landscape of artificial intelligence, OpenAI has once again grabbed headlines by releasing its new o1-preview model. As the dust settles on this latest unveiling, a critical question emerges: Does OpenAI's latest language model truly justify the hype – or more importantly, its hefty price tag?

Here's the price breakdown in USD per 1M tokens (as of 13/09/2024):

| Model | Input | Output |
|---|---|---|
| gpt-4o | $5.00 | $15.00 |
| gpt-4o-2024-08-06 | $2.50 | $10.00 |
| gpt-4o-2024-05-13 | $5.00 | $15.00 |
| gpt-4o-mini | $0.15 | $0.60 |
| gpt-4o-mini-2024-07-18 | $0.15 | $0.60 |
| o1-preview | $15.00 | $60.00 |
| o1-preview-2024-09-12 | $15.00 | $60.00 |
| o1-mini | $3.00 | $12.00 |
| o1-mini-2024-09-12 | $3.00 | $12.00 |

OpenAI's pricing wasn't very pocket-friendly in the first place, and o1 prices are just bonkers. I'm sure they will come down eventually, but for now I'm going to stick with Anthropic's Claude models.

TL;DR: The Short Answer is Still No

The o1-preview model does bring improvements to the table, but I think these advancements, noteworthy as they are, fall short of justifying the model's exorbitant cost: a staggering 100 times more expensive than gpt-4o-mini. Most of us are barely scratching the surface of what current models can do for our use cases. OpenAI's mission is AGI (or "true" AI), so they don't care as much about becoming profitable.

The sad reality of this AI arms race is simple: whoever gets there first will do everything possible to stop others from getting there. It's not something that will be released to the public as an API... It will be treated with the same (if not a higher) level of secrecy as nuclear weapons. There will be no open-source AGI. Ever.

Unpacking "Strawberry": What's New?

At its core, the o1-preview model uses a form of self-prompting called "Chain of Thought" (CoT) reasoning. This technique, first described in a paper posted in January 2022 and later presented at NeurIPS, allows the model to "think through" or iterate on thoughts, simulating a more human-like reasoning process.

OpenAI describes this as enabling their model to tackle more complex tasks by breaking them down into smaller, manageable steps. In theory, this should lead to more accurate and nuanced responses, especially for problems that require multi-step reasoning.
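To make the idea of iterating on thoughts concrete, here is a minimal Python sketch of a self-prompting loop. It is not OpenAI's implementation, which is not public. The names `reason_step_by_step` and `stub_model` are hypothetical, and the stub stands in for a real LLM call so the example runs offline.

```python
from typing import Callable, List


def reason_step_by_step(
    question: str,
    model: Callable[[str], str],
    max_steps: int = 3,
) -> List[str]:
    """Collect intermediate 'thoughts' by feeding each one back into the prompt."""
    thoughts: List[str] = []
    for step in range(1, max_steps + 1):
        # Rebuild the prompt so the model sees the question plus every earlier thought.
        prompt = f"Question: {question}\n"
        for i, thought in enumerate(thoughts, start=1):
            prompt += f"Thought {i}: {thought}\n"
        prompt += f"Thought {step}:"
        thoughts.append(model(prompt).strip())
    return thoughts


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; it only reports how many thoughts it saw."""
    seen = prompt.count("Thought ") - 1  # exclude the trailing, still-empty slot
    return f"refined the answer using {seen} earlier thought(s)"


steps = reason_step_by_step("Which of the three gods is which?", stub_model)
for line in steps:
    print(line)
```

Because `model` is just a callable, any provider's client could be swapped in for the stub. Note that every pass resends the whole history, so the prompt grows with each step.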

Claims vs. Reality

OpenAI's release announcement paints an impressive picture of o1-preview's capabilities:

- Advanced Reasoning: OpenAI claims the model can "spend more time thinking through problems before they respond, much like a person would."

- Benchmark Performance: According to OpenAI, the next model update performs similarly to PhD students on challenging tasks in physics, chemistry, and biology.

- Mathematical Prowess: In a qualifying exam for the International Mathematics Olympiad (IMO), the reasoning model reportedly scored 83%, compared to GPT-4o's 13%.

- Coding Capabilities: OpenAI states the model reached the 89th percentile in Codeforces competitions.

- Safety Improvements: The company claims significant advancements in model safety, with o1-preview scoring 84 out of 100 on a jailbreaking test, compared to GPT-4o's 22.

However, independent testing and analysis reveal a more nuanced picture. We have seen in the past how models get "dumber" over time as they get lobotomized. We wouldn't want the average Joe learning the truth about certain things, right? ;)

Better, But Not 100 Times Better

There's no denying that the o1-preview model shows improvements in certain areas. During testing, it demonstrated an ability to work through complex logical problems, such as the "Three Gods" riddle, by methodically considering each possibility and its implications.

However, the model still struggles with seemingly simpler tasks. For instance, it failed to generate a coherent 10-word sentence with incrementally longer words – a task that human testers accomplished with relative ease. It also struggled with a systems thinking problem about city bus routes, timing out without providing a solution.

These mixed results paint a picture of a model that, while more capable in some respects, is far from the quantum leap forward that its price point might suggest.

The Price: A Major Sticking Point and Hidden Costs

The most glaring issue with the o1-preview model is its cost. At $15 per million input tokens, it's a staggering 100 times more expensive than OpenAI's gpt-4o-mini model, which costs $0.15 per million input tokens. Against gpt-4o, it is 3x the $5.00 list price of the gpt-4o alias and 6x the $2.50 price of the gpt-4o-2024-08-06 snapshot. However, the true cost may be even higher when we consider the nature of the model's "reasoning" process.
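The multipliers are easy to check against the pricing table. The Python sketch below copies the table's figures. The helper name `price_ratio` is my own, and `Decimal` is used only to avoid floating-point noise in the division.

```python
from decimal import Decimal

# USD per 1M tokens as (input, output), copied from the pricing table (as of 13/09/2024).
PRICES = {
    "gpt-4o": (Decimal("5.00"), Decimal("15.00")),
    "gpt-4o-2024-08-06": (Decimal("2.50"), Decimal("10.00")),
    "gpt-4o-mini": (Decimal("0.15"), Decimal("0.60")),
    "o1-preview": (Decimal("15.00"), Decimal("60.00")),
    "o1-mini": (Decimal("3.00"), Decimal("12.00")),
}


def price_ratio(model: str, baseline: str) -> tuple:
    """Return (input_ratio, output_ratio): how many times pricier `model` is than `baseline`."""
    model_in, model_out = PRICES[model]
    base_in, base_out = PRICES[baseline]
    return model_in / base_in, model_out / base_out


for baseline in ("gpt-4o-mini", "gpt-4o", "gpt-4o-2024-08-06", "o1-mini"):
    in_ratio, out_ratio = price_ratio("o1-preview", baseline)
    print(f"o1-preview vs {baseline}: {in_ratio}x input, {out_ratio}x output")
```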

The Hidden Token Usage

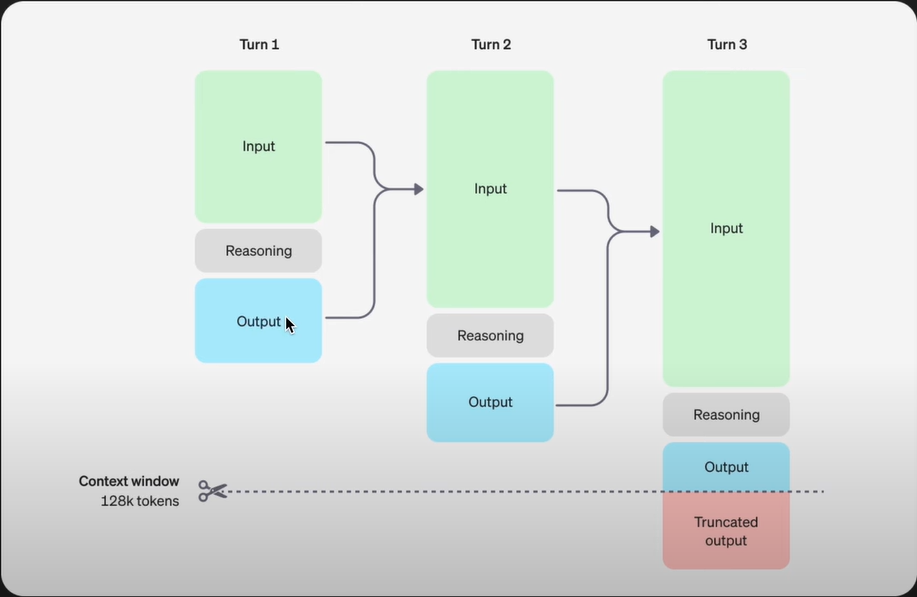

An overlooked aspect of the o1-preview model's operation is its token usage during the reasoning process. To "reason," the model performs multiple steps, analyzing its output, adding a new conclusion, and analyzing it again.

This process, while potentially leading to more thoughtful responses, comes with a significant caveat:

- Increased Token Consumption: Each step in the reasoning process consumes tokens, potentially many more than a single, straightforward response would.

- Lack of Visibility: Users don't have visibility into this internal reasoning process, making it difficult to control token usage or refine prompts.

- Unpredictable Costs: The output size may vary depending on the complexity of the task, leading to unpredictable and potentially much higher costs for users.

- Less User Control: Unlike manual prompt refinement, where users can see and control each step, the o1-preview's internal reasoning happens in a black box, reducing user control over the process and its associated costs.

This hidden token usage effectively multiplies the already high base cost of the model, making it even more expensive in practice than the sticker price suggests.
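A rough back-of-the-envelope calculation shows how quickly this adds up. The Python sketch below assumes that hidden reasoning tokens are billed at the output rate. The token counts are made-up illustrative numbers, not measurements, and `request_cost` is a hypothetical helper.

```python
from decimal import Decimal

# o1-preview list prices in USD per 1M tokens, from the pricing table (as of 13/09/2024).
INPUT_PRICE = Decimal("15.00")
OUTPUT_PRICE = Decimal("60.00")
PER_MILLION = Decimal(1_000_000)


def request_cost(input_tokens: int, visible_output_tokens: int, reasoning_tokens: int = 0) -> Decimal:
    """Estimate the USD cost of one request."""
    # Assumption: hidden reasoning tokens are billed like ordinary output tokens.
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * INPUT_PRICE + billed_output * OUTPUT_PRICE) / PER_MILLION


# Illustrative numbers only: 1,000 prompt tokens, a 500-token answer,
# and 4,000 hidden reasoning tokens the user never sees.
sticker = request_cost(1_000, 500)
actual = request_cost(1_000, 500, reasoning_tokens=4_000)
multiplier = actual / sticker

print(f"cost without reasoning tokens: ${sticker}")
print(f"cost with reasoning tokens:    ${actual}")
print(f"effective multiplier:          {multiplier:.2f}x")
```

With these assumed counts, the hidden tokens account for most of the bill, which is why the effective price depends far more on how long the model "thinks" than on the length of the visible answer.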

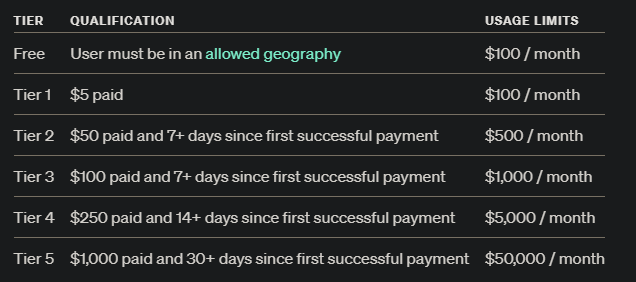

Prohibitive Access Tiers for API Usage

Adding insult to injury, OpenAI has implemented a tiered access system for the o1 model that further restricts its availability to developers and businesses.

To even qualify for using the o1 model via API, users must reach Tier 5, which requires a staggering $1,000 paid and 30+ days since the first successful payment. This tier allows for a usage limit of $50,000 per month. The lower tiers, ranging from free access to $250 paid and 14+ days of account age, have significantly lower usage limits and do not grant access to the o1 model. This tiered system effectively creates a massive barrier to entry, excluding smaller developers, startups, and researchers who might benefit from experimenting with the model but cannot justify such a substantial financial commitment.

This pricing structure not only reinforces the model's positioning as an exclusive, high-end product but also raises questions about OpenAI's commitment to democratizing AI access. By setting such high financial barriers, OpenAI is essentially limiting the model's use to large corporations and well-funded institutions, potentially stifling innovation and diverse applications of the technology.

Implications for Censorship and Model Behavior

The internal reasoning process of o1-preview also raises concerns about potential censorship and further "lobotomization" of the model:

- Enhanced Content Filtering: The multi-step reasoning process could allow for more sophisticated content filtering, potentially censoring or altering responses in ways that are not transparent to the user. But you know... it's all for your "safety".

- Bias Amplification: If biases exist in the model, the iterative reasoning process could potentially amplify these biases, leading to more skewed outputs.

- Lack of Transparency: The opacity of the reasoning process makes it difficult for users to understand or challenge the model's decision-making process, especially in cases where censorship or bias might be a concern.

- Potential for Overcorrection: In an attempt to make the model "safer," the internal reasoning steps might lead to overcorrection, resulting in overly sanitized or less nuanced responses.

Reevaluating the Value Proposition

Given these considerations, the value proposition of the o1-preview becomes even more questionable:

- True Cost Calculation: Users need to consider not just the base token rate, but also the potential multiplication of token usage due to the internal reasoning process.

- Transparency Concerns: The lack of visibility into the reasoning process raises questions about the trustworthiness and reliability of the model's outputs.

- Control vs. Convenience: While the model promises more sophisticated reasoning, it comes at the cost of user control and transparency, a trade-off that may not be acceptable for many applications.

- Ethical Considerations: The potential for enhanced censorship and bias amplification adds an ethical dimension to the decision to use this model.

This reevaluation of the price and its implications further underscores the need for careful consideration before adopting o1-preview, especially for applications where transparency, control, and cost-effectiveness are crucial factors.

Alternatives: Achieving Similar Results for Less

One of the most compelling arguments against the necessity of OpenAI's latest offering is the existence of alternatives that can achieve similar, if not better, results at a fraction of the cost.

Tools like LangChain or CrewAI have been implementing Chain-of-Thought reasoning (most commonly packaged as "agents") and other advanced prompting techniques for over a year. These open-source solutions offer developers much more control and flexibility, allowing for the creation of sophisticated AI systems without the need for a single, monolithic (and expensive) model.

The o1-mini: A More Practical Option?

Recognizing the potential cost barrier, OpenAI has also introduced o1-mini, a smaller, faster, and cheaper version of the reasoning model. At 80% less expensive than o1-preview, it's positioned as a more cost-effective solution for developers. However, it's worth noting that even at this reduced price, it's still significantly more expensive than many existing alternatives.

What This Says About OpenAI

The release of o1-preview and o1-mini seems to signal a shift in OpenAI's strategy. Once at the forefront of AI research, the company appears to be pivoting towards a more commercial, product-oriented approach. This shift is reflected in the exodus of researchers from the company and the increasing emphasis on integrating existing technologies rather than pioneering breakthroughs.

Critics argue that OpenAI is essentially commercializing techniques that have been in the public domain for years, without adding substantial innovations of their own. The use of cryptic project names like "Strawberry" and "Orion" may be seen as an attempt to create an aura of innovation around what are essentially incremental improvements.

The Bigger Picture: Industry Trends and Future Directions

As the dust settles on the o1-preview release, it's clear that the path forward in AI development may not lie in increasingly large and expensive models. Instead, the future seems to be trending towards more modular, flexible, and efficient approaches:

- Multi-Agent Frameworks: Tools like Microsoft's AutoGen and AgentForge represent a more adaptable and cost-effective approach to AI implementation.

- Specialization: Rather than one-size-fits-all models, we're likely to see more specialized AI tools designed for specific tasks or industries.

- Open Source Development: The success of open-source models and tools suggests a future where innovation is driven by collaborative efforts rather than closely-guarded proprietary technology. Or that's what many of us are hoping for...

- Efficiency and Cost-Effectiveness: As the novelty of generative AI wears off, the focus will shift to creating systems that are not just powerful, but also practical and economically viable.

The Data Play: A Hidden Agenda?

An often overlooked aspect of AI model releases is the potential for data gathering. This release of o1-preview may serve a dual purpose for OpenAI: not just as a product offering, but as a sophisticated data collection mechanism (not counting maintaining the hype for future investors).

The Data Hunger of AI Models

It's crucial to understand that the biggest challenge in AI training right now is data. Most large language models, including those from OpenAI, have already digested nearly all the written text humanity has ever created that's freely (and not so freely...) available on the internet. This presents a significant problem: how do you continue to improve AI models when you've exhausted your primary data source?

OpenAI's Potential Strategy

By releasing the o1-preview to the public, even at a high price point, OpenAI may be positioning itself to gather fresh, high-quality data in real-time. Here's how this strategy could work:

- Novel Interactions: Users interacting with o1-preview, especially those working on complex problems in science, math, and coding, are likely to generate novel conversations and problem-solving scenarios that don't exist in current datasets.

- High-Quality Data: Given the model's high cost, it's likely to be used primarily by professionals and researchers working on sophisticated problems, potentially generating high-quality, specialized data.

- Real-World Application: The data generated would reflect real-world applications and challenges, providing invaluable insights into how the model performs in practical scenarios.

- Continuous Improvement: This new data could be used to further train and refine the model, creating a feedback loop of continuous improvement.

- Diverse Domains: By making the model available across various platforms (ChatGPT Plus, Team, Enterprise, and API), OpenAI ensures a diverse range of use cases and domains, enriching their dataset.

Implications of This Approach

If this is indeed part of OpenAI's strategy, it raises several points for consideration:

- Ethical Concerns: There are potential ethical implications of using customer interactions to improve proprietary models, especially if this isn't made explicitly clear to users.

- Competitive Advantage: This approach could give OpenAI a significant edge in the AI race, as they would have access to fresh, real-world data that their competitors lack.

- Price Justification: The high price of o1-preview could be seen as a way to ensure the quality of the data generated, rather than just a reflection of the model's current capabilities.

- Long-term Strategy: This could be part of a longer-term strategy to create a self-improving AI system, where each interaction contributes to the model's growth and refinement.

A New Paradigm in AI Development?

If this hypothesis is correct, it suggests a shift in how AI companies might approach model development and deployment in the future. Rather than just products, these models become data collection tools, blurring the lines between product release and ongoing research and development.

This strategy, while potentially effective, also raises questions about transparency, data ownership, and the future of AI development. As users and developers, it's crucial to be aware of these potential dynamics when engaging with new AI models, especially those at the cutting edge of the field.

This could also be a step against open source models (we can already see OpenAI teaming up with the likes of Microsoft and Apple by effectively shutting down open source projects while pretending it's for our "safety").

Conclusion: Innovation, Integration, and Data

OpenAI's o1-preview model, while an interesting development, seems to represent more of an integration of existing technologies than a true breakthrough. Its prohibitive cost, combined with the availability of more flexible and cost-effective alternatives, makes it difficult to recommend for practical applications.

As the AI industry matures, the emphasis is likely to shift from headline-grabbing model releases to more nuanced, efficient, and practical AI solutions. For developers and businesses looking to leverage AI, the path forward may lie not in adopting the latest expensive model, but in creatively combining existing tools and techniques to create tailored, efficient AI systems.

In the end, while OpenAI's latest offering may not live up to its hype or justify its price for many users, it serves multiple purposes: as a showcase of incremental AI advancements, as a catalyst for important discussions about AI development, and potentially as a sophisticated data-gathering tool. As we move forward, the true innovations are likely to come not just from model improvements or new architectures, but from creative approaches to data acquisition, processing, and application in AI systems.