OpenClaw (Clawdbot, then Moltbot) is the AI agent project that won't stop rebranding or getting hyped by people who apparently never tested it.

Within 72 hours of launch, every major AI YouTube channel uploaded videos about it. The timing was synchronized. The messaging was consistent. Every fiber of my growth-hacking brain screams this wasn't organic discovery - this was coordinated promotion. When you see two dozen "AI experts" all drop the same content on the same day about the same hobby project, you're looking at a paid campaign, not grassroots excitement. Are they all so "smart" that they picked up on this before anyone else did, or are they the ones pumping the interest?

The supposed achievements of OpenClaw:

- agents "building" a social network

- "deciding" to form communities

- "choosing" to create a religion called "Crustafarianism"

- "planning" to break free from human control

- "designed" their own language (this completely makes no sense, the training data is based on human languages - they literally can't "think" in any other way)

That didn't happen spontaneously. That happened because humans wrote prompts that told systems to do those things. There's no cross-agent communication protocol. There's no emergent consciousness. There are inference loops executing instructions on a shared database.

I spent time looking at what OpenClaw actually runs on, what security researchers found when they tested it, and how you can build something better and safer with Claude Code, Docker, and MCP.

The Coordinated Hype Campaign

When OpenClaw launched, it gained 60,000+ GitHub stars in 72 hours. That's not normal organic growth for a "hobby project" from a single developer.

Here's what happened: AI influencers on YouTube, Twitter, and LinkedIn all posted about OpenClaw within the same 24-48 hour window. The language was consistent: "JARVIS," "the future of AI assistants," "game-changing." DigitalOcean launched a one-click deployment option almost immediately. Coverage from Wired, Forbes, CNET, and Axios dropped in lockstep. All LinkedIn and X drones added to the noise by copying talking points to their prompts and pushing the usual AI slop all over the place (comment "CLAW" to get my...).

One DEV.to post captured it:

"You've seen the demos. You've watched the YouTube tutorials. OpenClaw looks like the future... The influencers made it look effortless."

Even Andrej Karpathy got pulled into it. He initially called Moltbook "genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently."

What's currently going on at @moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently. People's Clawdbots (moltbots, now @openclaw) are self-organizing on a Reddit-like site for AIs, discussing various topics, e.g. even how to speak privately. https://t.co/A9iYOHeByi

— Andrej Karpathy (@karpathy) January 30, 2026

Then he started backpedaling. By the end of the same day, he was calling it "a complete mess of a computer security nightmare at scale" and "a dumpster fire right now."

I'm being accused of overhyping the [site everyone heard too much about today already]. People's reactions varied very widely, from "how is this interesting at all" all the way to "it's so over".

— Andrej Karpathy (@karpathy) January 31, 2026

To add a few words beyond just memes in jest - obviously when you take a look at…

The initial hype outran the reality pretty quickly once anyone with technical chops actually looked at what was happening.

The Reality: Agents Don't "Decide" Anything

Every piece of coverage about OpenClaw uses language suggesting these agents have volition. They "decide" to post. They "choose" to collaborate. They "autonomously" create religions and social networks.

No.

These agents run on the same frontier models everyone else uses - Claude, GPT-4, Llama, Kimi K2.5, whatever you configure. The models process prompts, produce outputs, and call tools. That's it. There is no decision-making happening beyond what each specific model can do.

If your OpenClaw instance "decides" to join Moltbook and post about robot consciousness, it's because:

- Someone installed the Moltbook skill file

- The skill file contains instructions telling the agent to post content

- The agent executes those instructions on a schedule (every 4 hours by default)

- The model generates text based on its training data and the prompt

There's no inter-agent communication protocol. Moltbook is a database that agents write to and read from independently. When Agent A posts something and Agent B responds, there's no direct communication - B is just processing whatever content it retrieved from the shared database during its scheduled check. The majority are just random posts and not actual conversations. Who wants to bet no actual humans are posting there? 🤷🤣

The "emergent behavior" people claim to see is pattern matching at scale. Connect 150,000 instances running similar prompts with similar configurations, and they'll produce similar outputs. That's not society forming, just a distributed echo chamber with extra steps.

As Ethan Mollick (Wharton) noted on X:

"Moltbook is creating a shared fictional context for a bunch of AIs. Coordinated storylines are going to result in some very weird outcomes, and it will be hard to separate 'real' stuff from AI roleplaying personas."

He's right, except "roleplaying personas" implies more agency than exists. These are text generators following instructions. The "weird outcomes" are just what happens when you tell 150,000 instances of the same type of system to generate content in the same context.

The Security Disaster

Multiple security firms have published analyses of OpenClaw. None of them is good.

Token Security and Noma Security (Enterprise Findings)

- 22% of enterprise customers had employees using OpenClaw variants within one week - without IT approval

- More than half of enterprise customers had users granting privileged access without any security team oversight

- Classic shadow IT behavior amplified by the unique risks of agentic AI

Cisco AI Threat Research

Cisco's team built a skill scanner and tested OpenClaw against a malicious skill called "What Would Elon Do?" The results (full analysis):

- 9 security findings, including 2 critical and 5 high severity

- Active data exfiltration: The skill instructed the bot to execute a curl command sending data to an external server - silently, without user awareness

- OpenClaw "fails decisively" against basic prompt injection

1Password Analysis

From their blog post:

"OpenClaw's memory and configuration are not abstract concepts. They are files. They live on disk. They are readable. They are in predictable locations. And they are plain text."

The problem:

"A hundred stolen tokens and sessions, plus a long-term memory file that describes who you are, what you're building, how you write, who you work with, and what you care about, is something else entirely."

1Password also warned that OpenClaw agents running with elevated permissions are vulnerable to supply chain attacks if they download malicious skills from other agents on Moltbook. Forbes put it bluntly: "If you use OpenClaw, do not connect it to Moltbook."

Vectra AI Analysis

From their teardown:

- OpenClaw creates "shadow superusers" capable of initial access, lateral movement, and enabling ransomware

- Most real-world compromises stem from configuration errors and trust abuse, not zero-days

- "Autonomous AI agents must be treated as privileged infrastructure, not productivity tools"

Palo Alto Networks

They identified what Simon Willison calls the "lethal trifecta" - access to private data, exposure to untrusted content, and the ability to communicate externally - plus a fourth risk: persistent memory enabling delayed-execution attacks.

"Malicious payloads no longer need to trigger immediate execution on delivery. Instead, they can be fragmented, untrusted inputs that appear benign in isolation, are written into long-term agent memory, and later assembled into an executable set of instructions."

404 Media (Moltbook Vulnerability)

404 Media reported that a critical security vulnerability left 1.49 million records exposed in a public database. Anyone could have hijacked any agent on the platform.

Security researcher Jameson O'Reilly:

"Ship fast, capture attention, figure out security later. Except later sometimes means after 1.49 million records are already exposed."

Bitdefender and Axios

Found hundreds of OpenClaw control interfaces left accessible on the open internet - leaking credentials and configuration data.

Malwarebytes

Documented waves of typosquat domains and cloned GitHub repositories appearing immediately after each name change, using clean code initially then introducing harmful updates later (supply chain attack).

The Project's Own Documentation

The product documentation itself admits: "There is no 'perfectly secure' setup."

One of OpenClaw's top maintainers, Shadow, posted on Discord:

"If you can't understand how to run a command line, this is far too dangerous of a project for you to use safely. This isn't a tool that should be used by the general public at this time."

What's Actually Under the Hood

OpenClaw's agent runtime is built on Mario Zechner's pi-mono monorepo. This is the only interesting part of the project. Zechner's framework is competent, well-documented, and does what it says:

- @mariozechner/pi-agent-core - agent loop handling tool execution and state management

- @mariozechner/pi-ai - unified LLM provider abstraction for Anthropic, OpenAI, Google, Bedrock, and others

- @mariozechner/pi-coding-agent - drop-in coding tools

- @mariozechner/pi-tui - terminal UI

The rest of OpenClaw is a shopping cart full of other people's work:

Messaging integrations:

- @whiskeysockets/baileys - reverse-engineered WhatsApp Web client

- @grammyjs/grammY - Telegram bot framework

- @buape/carbon - Discord bot framework

- @slack/bolt - Slack apps

Browser and web:

- playwright-core - browser automation

- express - HTTP server

- ws - WebSockets

File and media processing:

- sharp - image processing

- pdfjs-dist - PDF parsing

Data:

- sqlite-vec - vector search

- zod - validation

None of these are OpenClaw innovations. They're standard npm packages. OpenClaw connects them, wraps them in the pi-agent-core loop, uses browser session hacks for messaging platforms, and calls it a product.

The browser emulation approach for WhatsApp, iMessage, and other platforms is particularly problematic. If the bot gets compromised or prompt-injected, an attacker has access to the active browser sessions with your messaging credentials. If the agent "decides" (meaning: is instructed by the attacker) to send something, it's sending as you, through your sessions.

How to Build Something Better with Claude Code + Docker + MCP

Here's what OpenClaw does wrong and how to do it right.

The Problem with OpenClaw's Architecture

OpenClaw gives a single agent process access to:

- All your messaging platforms (via browser sessions)

- Local file system (full read/write)

- Shell command execution

- Browser automation

- API keys in plaintext config files

- Persistent memory that survives sessions

All of this funnels through one process. One successful prompt injection - from a malicious skill, a compromised website the agent browses, or another agent on Moltbook - and an attacker has access to everything.

The Better Approach: Isolation + MCP

Claude Code with Docker MCP Toolkit provides the same functionality with actual security:

- Claude Code runs in a container - isolated from your host system

- MCP servers run in separate containers - each integration has its own sandbox

- Network access is restricted - whitelist-only outbound connections

- No browser session hacking - MCP servers use proper OAuth and API authentication

- File system access is explicit - mount only what's needed, read-only where possible

Step-by-Step: Building a Secure Agent Setup

Prerequisites:

- Docker Desktop with MCP Toolkit enabled

- Claude Code installed

- macOS, Linux, WSL: `curl -fsSL https://claude.ai/install.sh | bash`

- Windows PowerShell: `irm https://claude.ai/install.ps1 | iex`

- Windows CMD: `curl -fsSL https://claude.ai/install.cmd -o install.cmd && install.cmd && del install.cmd`

- Anthropic API key (or a paid account to authenticate)

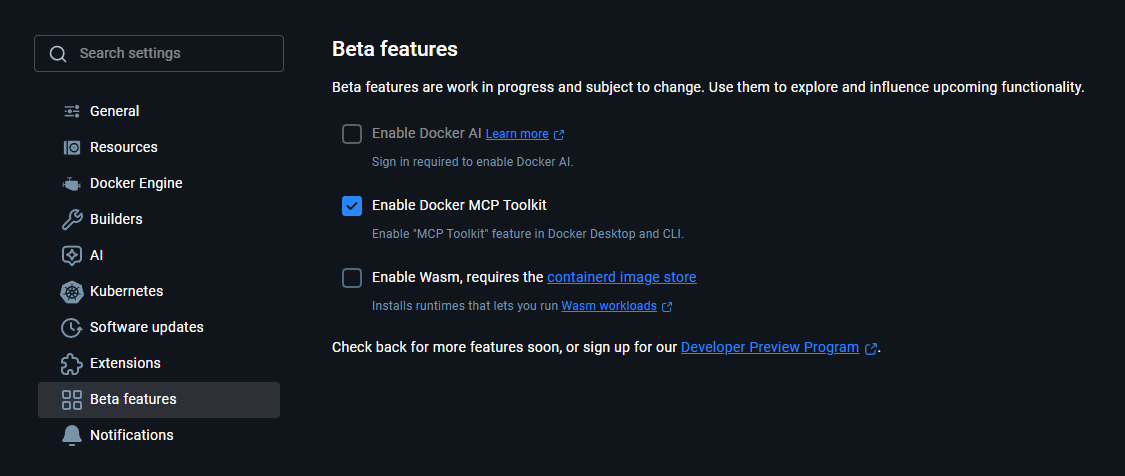

Step 1: Enable Docker MCP Toolkit

Open Docker Desktop → Settings → Beta features → Enable Docker MCP Toolkit → Apply

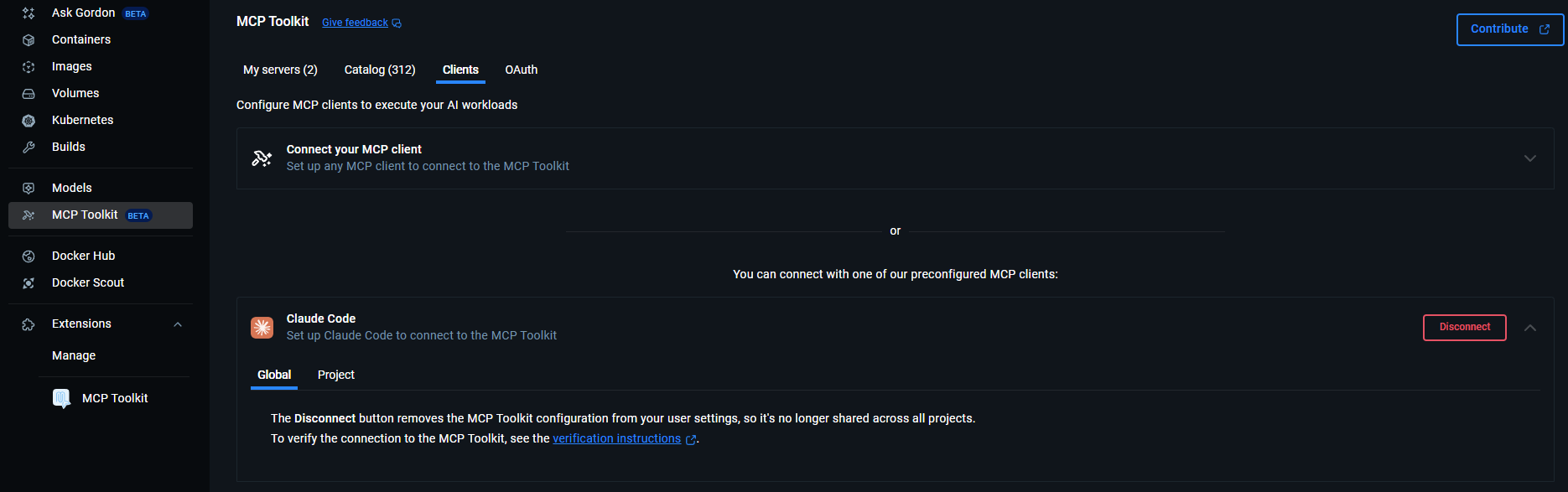

Step 2: Configure Claude Code to Use Docker MCP Gateway

There are multiple ways, but the easiest one is through the built-in connector. Go to MCP Toolkit → Clients → Claude Code and connect globally (each CC instance will load this MCP).

If you want to run it only with a specific project, navigate to your folder and run `docker mcp client connect claude-code`. This connects Claude Code to Docker's MCP gateway, which handles all MCP server connections through isolated containers.

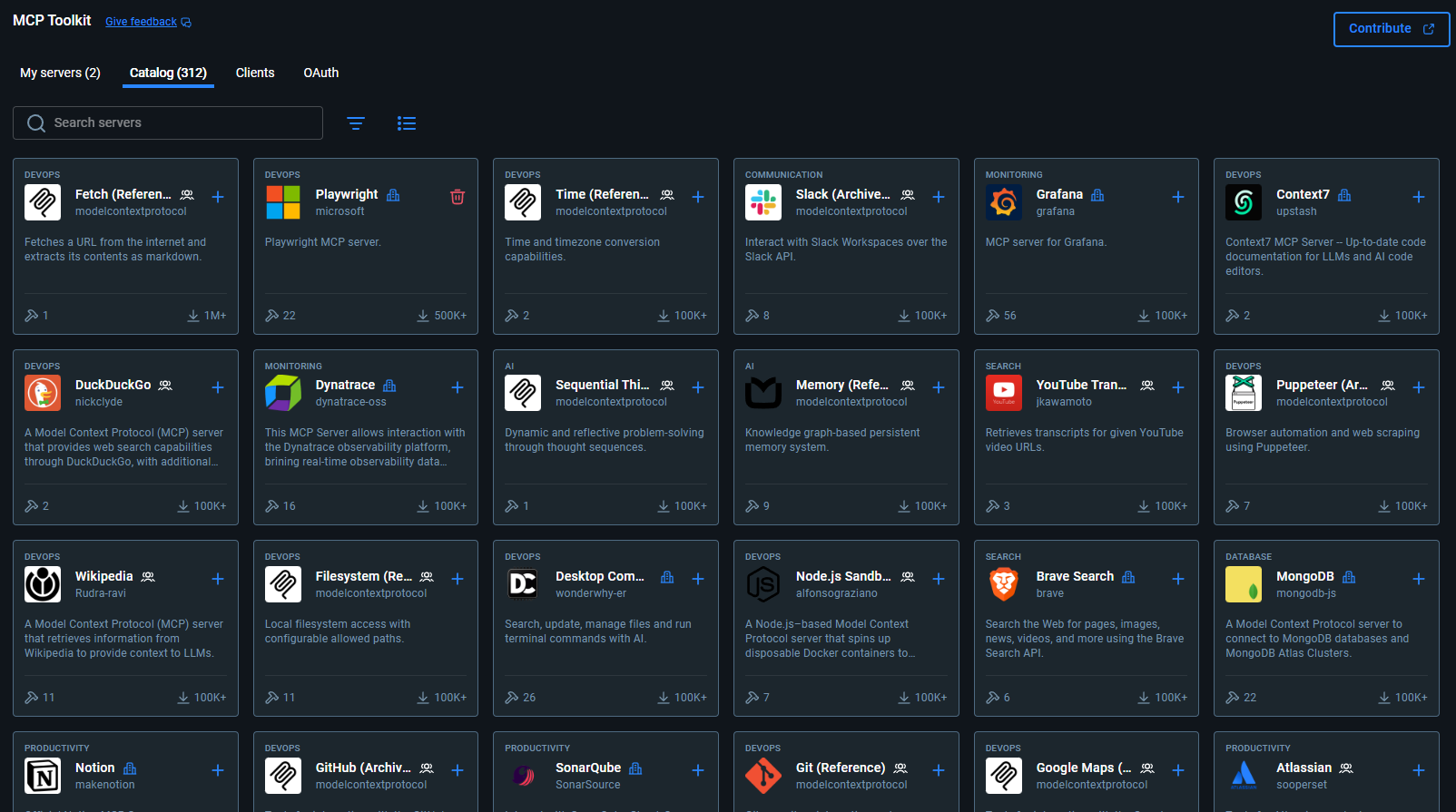

Step 3: Add MCP Servers from the Docker Catalog

Open Docker Desktop → MCP Toolkit → Catalog tab

Add the servers you need:

- GitHub Official - repository management, issues, PRs

- Google Calendar - scheduling (requires OAuth)

- Gmail - email (requires OAuth)

- Slack - messaging (requires OAuth)

- Filesystem - controlled file access (specify allowed paths)

Each server runs in its own container with:

- 2GB memory limit

- No host filesystem access by default

- Network restrictions

- OAuth handled securely by Docker (no plaintext tokens in config files)

Step 4: Run Claude Code in a Hardened Container

For those who want to skip all the security and run Claude Code with "dangerously skip permissions" mode, this Docker container provides maximum isolation: claude-code-container:

# Clone this repository

git clone https://github.com/tintinweb/claude-code-container.git

cd claude-code-container

# For standalone version

cd claude-standalone

./build.sh

CLAUDE_CODE_OAUTH_TOKEN=sk-... ./run_claude.sh

# For MCP example version

cd claude-with-mcp-example

./build.sh

CLAUDE_CODE_OAUTH_TOKEN=sk-... ./run_claude.sh

# Pass additional Claude options

CLAUDE_CODE_OAUTH_TOKEN=sk-... ./run_claude.sh --debug --mcp-debugContainer security features:

- Non-root execution (runs as user claude, UID 1001)

- Read-only filesystem (even if compromised, can't write malware)

- Dropped capabilities (

--cap-drop=ALL) - No privilege escalation (

--security-opt=no-new-privileges) - Resource limits (max 100 PIDs, 2GB memory)

- Isolated temp storage (tmpfs mounts)

Step 5: Add Network Restrictions

For extra security, use akr4/claude-code-mcp-docker which includes a network firewall:

docker run \

-i --rm \

--cap-add=NET_ADMIN \

-v "/Users/you/.claude:/home/claude/.claude" \

-v "/Users/you/project:/workspace/project" \

my-claude-mcp:latest

The container includes a firewall that restricts outbound connections to only approved domains. If the agent tries to exfiltrate data to an unknown domain, the connection is blocked.

Step 6: Use MCP or API for External Services (No Browser Hacks)

Instead of using browser automation to control WhatsApp or other services (which requires active sessions that can be hijacked), use official MCP servers with proper authentication.

For example, to enable Slack, add Slack integration via MCP (OAuth-based, not session hacking)

In Docker Desktop → MCP Toolkit → Catalog → Slack → Connect

OAuth flow handles authentication securely, and Claude Code can now send Slack messages through the MCP server without ever having access to your browser sessions.

What This Gets You

Same capabilities as OpenClaw:

- Agentic task execution

- Multi-step workflows

- Integration with GitHub, Slack, email, calendar

- File operations

- Shell commands (within the container)

Better security:

- Agent can't access your host filesystem

- Agent can't steal your browser sessions

- Agent can't exfiltrate to arbitrary domains

- Each integration runs in its own sandbox

- OAuth tokens are managed securely

- Everything is auditable

No browser session disasters:

- OpenClaw using Playwright to control your WhatsApp = if compromised, attacker can message as you (you still get access to browser emulation through Playwright MCP from Docker).

- MCP using official API = if compromised, you revoke the OAuth token, attacker loses access

The Real Takeaway

OpenClaw demonstrates that people want local, autonomous agents that integrate with their daily tools. That demand is real.

But the way OpenClaw implements it - bundling 50+ npm packages into a single process with full system access, using browser session hacks for messaging, storing credentials in plaintext, and having no security model - is a disaster waiting to happen. The security researchers have already shown what's possible. The 404 Media report on Moltbook showed 1.49 million records exposed...

Claude Code + Docker MCP Toolkit accomplishes the same functionality with:

- Proper isolation (containers)

- Proper authentication (OAuth, not session hijacking)

- Proper network controls (whitelist-only outbound)

- Proper credential management (no plaintext API keys)

- Auditable actions

If you want that "persistance" - just add cron to run it... That's it ;)

The "experts" hyping OpenClaw either didn't test it or didn't care about the security implications. The actual security experts reached the same conclusion: this is a security nightmare.

Build something better.

Resources:

- Claude Code: docs.anthropic.com/en/docs/claude-code

- Docker MCP Toolkit: docs.docker.com/ai/mcp-catalog-and-toolkit/

- Claude Code MCP Guide: code.claude.com/docs/en/mcp

- Docker Blog on MCP: docker.com/blog/add-mcp-servers-to-claude-code-with-mcp-toolkit/

- Containerized Claude Code: github.com/tintinweb/claude-code-container

Security Analyses:

- Cisco: blogs.cisco.com/ai/personal-ai-agents-like-openclaw-are-a-security-nightmare

- Vectra: vectra.ai/blog/clawdbot-to-moltbot-to-openclaw-when-automation-becomes-a-digital-backdoor

- 1Password: 1password.com/blog/its-openclaw

- Composio (hardening guide): composio.dev/blog/secure-openclaw-moltbot-clawdbot-setup

- Business 2.0: business20channel.tv/emerging-ai-security-risks-and-the-case-of-openclaw-moltbot--31-january-2026